Overview

Overview

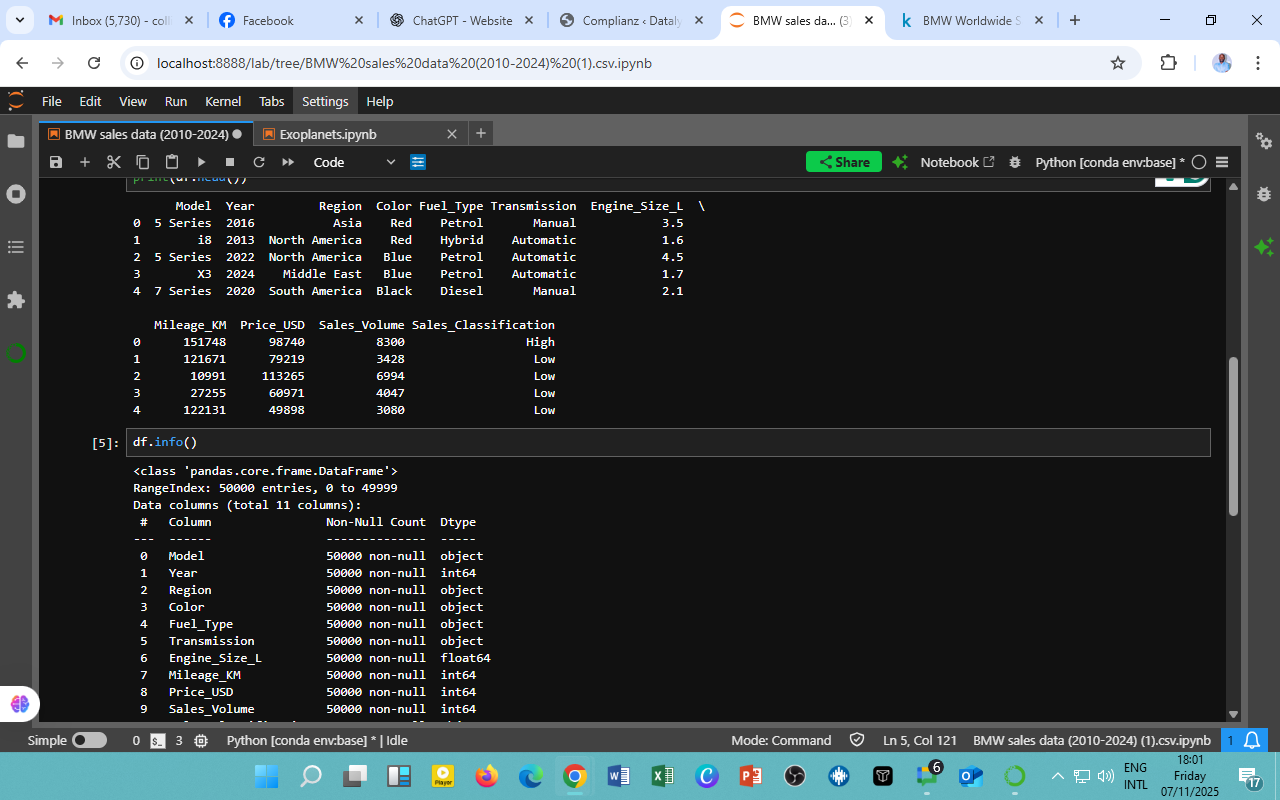

This section examines BMW’s sales performance across four major fuel categories, Diesel, Petrol, Hybrid, and Electric, from 2010 to 2024. The analysis reveals how shifts in technology, policy, and consumer behavior shaped BMW’s sales mix over time.

Findings

The time-series plot above shows fluctuating yet competitive sales volumes across all fuel types. On average, annual sales hovered between 4.0 and 4.7 million units, indicating BMW’s sustained global market presence despite economic shocks and changing fuel trends.

Key Observations:

-

Diesel: Sales remained strong in the early 2010s but showed volatility after 2016, reflecting tightening European emission regulations and diesel-phaseout campaigns.

-

Petrol: Maintains steady performance, often used as BMW’s baseline product line; spikes appear around 2014, 2018, and 2022—periods associated with new model launches.

-

Hybrid: Gradual and significant growth post-2015, peaking around 2023–2024, aligning with global sustainability goals and policy incentives.

-

Electric: Rapid climb beginning in the mid-2010s, consolidating as a core growth area by 2024, evidence of BMW’s strategic pivot toward electrification (notably the i3, i4, and iX series).

Interpretation

The sales volatility across years reflects both macroeconomic disruptions (COVID-19, supply chain shocks) and technological transitions within the automotive sector. However, the sustained rise of hybrid and electric vehicles indicates consumer acceptance of BMW’s green mobility strategy and the company’s ability to adapt to policy-driven market evolution.

Key Insights

-

Strategic Transition: BMW’s balanced fuel portfolio enabled it to navigate uncertainty while investing in hybrid and electric R&D.

-

Market Maturity: Diesel and petrol variants contribute a major share, but their long-term decline is evident.

-

Policy Link: Global environmental regulations and carbon-credit frameworks clearly correlate with the rising sales of hybrid and electric vehicles.

-

Forward Outlook: BMW’s 2030+ targets for fully electric production appear realistic, given the sales acceleration observed since 2020.

Acknowledgments

-

Data Source: BMW Sales Data (2010–2024), prepared within the DatalytIQs Academy Analytics Framework.

-

Tools & Methods: Python (pandas, matplotlib, seaborn) — grouped by

YearandFuel_Typefor sales trend visualization. -

Contributors:

-

Collins Odhiambo Owino — Lead Analyst & Author, DatalytIQs Academy

-

Kaggle Open Automotive Datasets — Data structuring and reference support

-

BMW Group Annual & Sustainability Reports — Contextual insights on electrification strategy

-

Author’s Note

Written by Collins Odhiambo Owino

Founder & Lead Researcher, DatalytIQs Academy

Empowering learners and professionals in Mathematics, Economics, and Finance through data-driven insight.

You must be logged in to post a comment.